What is the best way to merge two separate DHIS2 set-ups into one system?

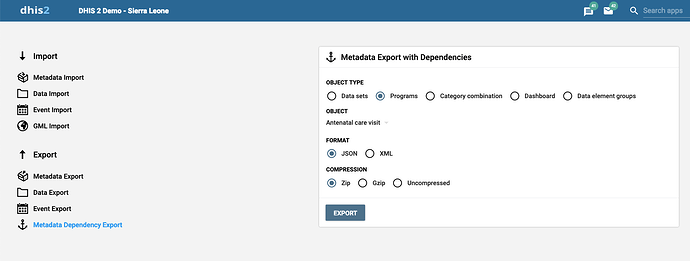

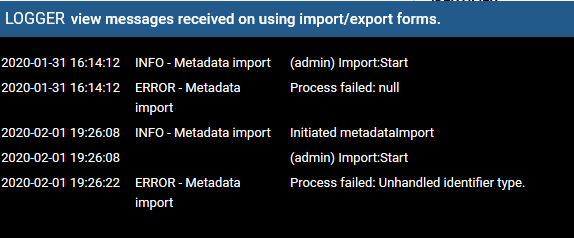

I have two separate setups, each with different Tracker Capture programs implemented on it. Both run DHIS2 2.30 on the same PostgreSQL version (10.7). I want to merge the two applications. I tried metadata export/import, but on import I get the following messages in the Logger-view console:

2019-03-05 21:44:04

INFO - Metadata import

Initiated metadataImport

44:15

(admin) Import:Start

44:36

(admin) Import:Done took 20.75 seconds

44:37

Created: 0, Updated: 0, Deleted: 0, Ignored: 782, Total: 782

I also see the following in my logs:

* INFO 2019-03-05 21:49:36,686 Skipping unknown property 'system'. (DefaultRenderService.java [http-bio-9000-exec-10])

* INFO 2019-03-05 21:49:37,050 (admin) Import:Start (DefaultMetadataImportService.java [taskScheduler-20])

* INFO 2019-03-05 21:49:37,057 [Level: INFO, category: METADATA_IMPORT, time: Tue Mar 05 21:49:37 CAT 2019, message: (admin) Import:Start] (InMemoryNotifier.java [taskScheduler-20])

* INFO 2019-03-05 21:49:42,554 (admin) Import:Preheat[REFERENCE] took 5.48 seconds (DefaultPreheatService.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,277 Validation of 'Credentials expiry alert' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,288 [ErrorReport{message=Failed to add/update job configuration - Another job of the same job type is already scheduled with this cron expression, errorCode=E7000, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}, ErrorReport{message=Failed to validate job runtime - `EMAIL gateway configuration does not exist`, errorCode=E7010, mainKlass=class org.hisp.dhis.credentials.CredentialsExpiryAlertJob, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,447 Validation of 'Dataset notification' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,447 [ErrorReport{message=Failed to add/update job configuration - Another job of the same job type is already scheduled with this cron expression, errorCode=E7000, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,548 Validation of 'Data statistics' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,549 [ErrorReport{message=Failed to add/update job configuration - Another job of the same job type is already scheduled with this cron expression, errorCode=E7000, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,732 Validation of 'File resource clean up' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,732 [ErrorReport{message=Failed to add/update job configuration - Another job of the same job type is already scheduled with this cron expression, errorCode=E7000, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,866 Validation of 'Kafka Tracker Consume' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:43,866 [ErrorReport{message=Failed to add/update job configuration - Trying to add job with continuous exection while there already is a job with continuous exectution of the same job type., errorCode=E7001, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,012 Validation of 'Remove expired reserved values' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,012 [ErrorReport{message=Failed to add/update job configuration - Another job of the same job type is already scheduled with this cron expression, errorCode=E7000, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,104 Validation of 'Validation result notification' failed. (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,105 [ErrorReport{message=Failed to add/update job configuration - Another job of the same job type is already scheduled with this cron expression, errorCode=E7000, mainKlass=class org.hisp.dhis.scheduling.JobConfiguration, errorKlass=null, value=null}] (JobConfigurationObjectBundleHook.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,260 (admin) Import:Validation took 1.38 seconds (DefaultObjectBundleValidationService.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,297 (admin) Import:Done took 7.25 seconds (DefaultMetadataImportService.java [taskScheduler-20])

* INFO 2019-03-05 21:49:44,298 [Level: INFO, category: METADATA_IMPORT, time: Tue Mar 05 21:49:44 CAT 2019, message: (admin) Import:Done took 7.25 seconds] (InMemoryNotifier.java [taskScheduler-20])
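
Every failed validation in the log above concerns a job configuration (E7000, E7001, E7010), so one experiment would be to remove the scheduled jobs from the export before importing it. Below is a minimal sketch in Python. It assumes the export is a JSON file with the job configurations under a top-level `jobConfigurations` key, which is an assumption about the export layout. The file names are hypothetical, and I have not verified that this changes the import result.

```python
import json

# Top-level keys to drop from the export. "jobConfigurations" is an
# assumption about how the metadata export names its scheduled jobs.
KEYS_TO_DROP = ("jobConfigurations",)


def strip_keys(metadata, keys=KEYS_TO_DROP):
    """Return a copy of the metadata payload without the given top-level keys."""
    return {name: value for name, value in metadata.items() if name not in keys}


def strip_file(src_path, dst_path, keys=KEYS_TO_DROP):
    """Read a JSON export, drop the given top-level keys, write the result."""
    with open(src_path, encoding="utf-8") as src:
        metadata = json.load(src)
    with open(dst_path, "w", encoding="utf-8") as dst:
        json.dump(strip_keys(metadata, keys), dst)


if __name__ == "__main__":
    # Hypothetical file names; replace with the real export path.
    strip_file("metadata.json", "metadata-without-jobs.json")
```

The stripped file could then be imported in place of the original export to see whether the objects are still ignored.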

Kindly assist.