The DHIS2 Level 1 Data Use Academy was designed to help participants improve their data analysis, data quality, and data use skills and knowledge. Previously, global and regional trainings of this type used either anonymized or generated organisation units, data elements, indicators and data. This made it difficult for participants to transfer their skills back to their countries, and the academy was eventually deprecated and replaced by Level 1 Analytics Tools.

The need to tailor data use training so that participants build skills using their own country's live data and database has been recognized for some time. Indonesia was therefore selected as the first country to pilot a more standardized training approach that could be adapted in-country, in order to support the implementation of data use training in additional countries going forward.

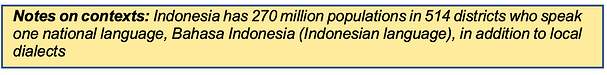

Figure 1. Participants practising data analysis with the Data Visualizer app

Why adapt the regional academy into a country-specific academy?

In Indonesia, DHIS2 (locally called ASDK) acts as the integrated data warehouse, pulling data from multiple programs including HIV, TB, malaria, maternal and child health (MCH), and immunisation. An assessment conducted in 2018 showed that many of the dashboards had critical problems that hindered optimal use of data and information for program monitoring, planning, and decision making.

By adapting the regional Level 1 Data Use Academy to the local context, the implementation team expected more meaningful capacity building, because participants would work with their own live data. This allowed real data quality challenges to be reviewed, and conclusions about program performance to be examined, in greater detail than is possible in a regional or global academy.

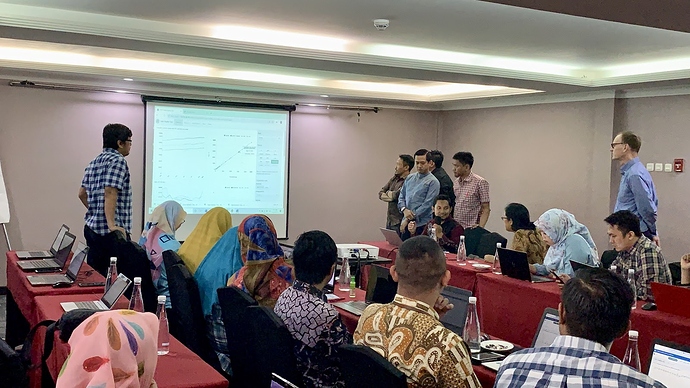

Figure 2. Each health programme presented the data elements, indicators, and analytics it routinely monitored

How did it work?

The in-country academy is based on five key principles identified in the WHO guidelines for facility analysis:

- Identifying/reviewing the key indicators used in the program

- Reviewing the quality of the data collected in the program

- Reviewing the targets/denominators used in the program

- Visualizing and interpreting/analysing the program data

- Communicating and using the findings to improve program data quality and service delivery

The agenda and curriculum were therefore designed to ensure that all of these core principles were covered during the training.

Figure 3. Participants tested their understanding by explaining what they had learned to other participants

What was done differently?

Several adaptations were made to the academy, including:

- At the beginning of the academy, each health program from the Ministry (such as HIV, TB, immunisation, and HRH) presented its data use routines (visualisation, analytics, and monitoring). On the last day, reflecting on the academy, each program proposed how ASDK/DHIS2 could be used to further strengthen its review of program data.

- We used the local database (data elements, indicators, maps, data values, population targets and estimates) for demonstrations and hands-on exercises.

- Local trainers, trained by WHO and the University of Oslo, facilitated the majority of sessions, using the local language whenever possible.

Figure 4. HIV program staff proposing how ASDK could be used for HIV data review

Conclusion

- In-country data use trainings have a stronger impact than regional or global trainings, but they must be well structured, applying adult learning principles and well-researched training methodologies in a localized context. Before this type of training, the data must be reviewed in detail to identify data quality issues and to prepare relevant analytical outputs for participants to review and discuss; sufficient time should be allotted for these tasks.

This use case was prepared by Aprisa Chrysantina and Shurajit Dutta.