I have a dhis2 instance where we had new orgunits and we added to them to a dataset.

but we never managed to get their completeness/timeliness informations from the analytics api.

After a few hours of investigation we “collected” the sql run by dhis2 via pg_stat_statements.

the job doesn’t seem to swap the analytics_completenesstarget_temp

drop table if exists analytics_completenesstarget_temp cascade

create table analytics_completenesstarget_temp ("mXJvqnCbpdu" character(11),"GdgsoyjCb6p" character(11),"Agr9GjmspRS" character(11),"qGLVb3cA8Tw" character(11),"KjfJ9g9ohiR" character(11),"KV8VpFkC5EM" character(11),"aID5Jv1y80k" character(11),"nTXcyv9tHOz" character(11),"bYzw7CILYVt" character(11),"ub882X5co8g" character(11),"cuob2ISPULh" character(11),"RNqOqEup3LK" character(11),"ou6a8FgCXh2" character(11),"uidlevel1" character(11),"uidlevel2" character(11),"uidlevel3" character(11),"uidlevel4" character(11),"uidlevel5" character(11),"ouopeningdate" date,"oucloseddate" date,"costartdate" date,"coenddate" date,"dx" character(11) not null,"ao" character(11) not null,"value" double precision) with(autovacuum_enabled = false)

insert into analytics_completenesstarget_temp ("mXJvqnCbpdu","GdgsoyjCb6p","Agr9GjmspRS","qGLVb3cA8Tw","KjfJ9g9ohiR","KV8VpFkC5EM","aID5Jv1y80k","nTXcyv9tHOz","bYzw7CILYVt","ub882X5co8g","cuob2ISPULh","RNqOqEup3LK","ou6a8FgCXh2","uidlevel1","uidlevel2","uidlevel3","uidlevel4","uidlevel5","ouopeningdate","oucloseddate","costartdate","coenddate","dx","ao","value") select ougs."mXJvqnCbpdu",ougs."GdgsoyjCb6p",ougs."Agr9GjmspRS",ougs."qGLVb3cA8Tw",ougs."KjfJ9g9ohiR",ougs."KV8VpFkC5EM",ougs."aID5Jv1y80k",ougs."nTXcyv9tHOz",ougs."bYzw7CILYVt",ougs."ub882X5co8g",ougs."cuob2ISPULh",ougs."RNqOqEup3LK",ougs."ou6a8FgCXh2",ous."uidlevel1",ous."uidlevel2",ous."uidlevel3",ous."uidlevel4",ous."uidlevel5",ou.openingdate,ou.closeddate,doc.costartdate,doc.coenddate,ds.uid,ao.uid,$1 as value from _datasetorganisationunitcategory doc inner join dataset ds on doc.datasetid=ds.datasetid inner join organisationunit ou on doc.organisationunitid=ou.organisationunitid left join _orgunitstructure ous on doc.organisationunitid=ous.organisationunitid left join _organisationunitgroupsetstructure ougs on doc.organisationunitid=ougs.organisationunitid left join categoryoptioncombo ao on doc.attributeoptioncomboid=ao.categoryoptioncomboid left join _categorystructure acs on doc.attributeoptioncomboid=acs.categoryoptioncomboid

but never found the statement swapping the analytics_completenesstarget_temp to analytics_completenesstarget

so we manually run the script upper and then launched the 2 extra sql

drop table if exists analytics_completenesstarget cascade;

alter table analytics_completenesstarget_temp rename to analytics_completenesstarget

and voila analytics api started returning results for these new orgunits.

so question

- is this a known bug (I haven’t seen a bug report about that)

- it’s on a 2.33 which is no more supported from what I found

- is there circumstances where the swap might be skipped ?

- the code supposed to swap the tables is called “executeSilently” so I fear it’s hiding the problem ?

the analytics log

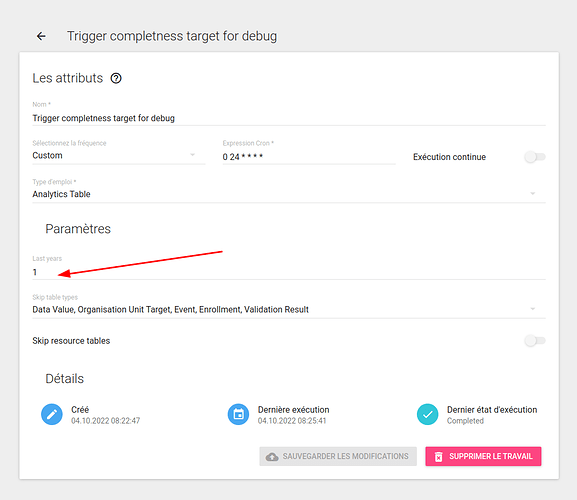

* INFO 2022-10-03 14:44:50,561 Analytics table update parameters: AnalyticsTableUpdateParams{last years=1, skip resource tables=false, skip table types=[EVENT, DATA_VALUE, ORG_UNIT_TARGET, ENROLLMENT, VALIDATION_RESULT], start time=2022-10-03T14:40:00} (DefaultAnalyticsTableService.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,561 Starting update of type: COMPLETENESS_TARGET, table name: 'analytics_completenesstarget', processes: 2: 00:00:00.000 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,563 Table update start: analytics_completenesstarget, earliest: 2022-01-01T00:00:00, parameters: AnalyticsTableUpdateParams{last years=1, skip resource tables=false, skip table types=[EVENT, DATA_VALUE, ORG_UNIT_TARGET, ENROLLMENT, VALIDATION_RESULT], start time=2022-10-03T14:40:00}: 00:00:00.001 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,564 Performed pre-create table work: 00:00:00.002 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,566 Dropped temp tables: 00:00:00.004 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,566 Creating table: analytics_completenesstarget_temp, columns: 24 (AbstractJdbcTableManager.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,573 Created analytics tables: 00:00:00.011 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,573 Populate table task number: 1 (DefaultAnalyticsTableService.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,908 Populate analytics_completenesstarget_temp in: 0.33 seconds (AbstractJdbcTableManager.java [taskScheduler-11])

* INFO 2022-10-03 14:44:50,908 Populated analytics tables: 00:00:00.346 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,925 Invoked analytics table hooks: 00:00:00.363 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,951 Applied aggregation levels: 00:00:00.389 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:50,951 No of analytics table indexes: 24 (DefaultAnalyticsTableService.java [taskScheduler-12])

* INFO 2022-10-03 14:44:52,471 Created indexes: 00:00:01.909 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:52,700 Analyzed tables: 00:00:02.138 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:52,700 Removed updated and deleted data: 00:00:02.138 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:52,708 Swapping table, master table exists: true, skip master table: true (AbstractJdbcTableManager.java [taskScheduler-12])

* INFO 2022-10-03 14:44:52,943 Table update done: analytics_completenesstarget: 00:00:02.381 (Clock.java [taskScheduler-12])

* INFO 2022-10-03 14:44:52,943 Analytics tables updated: 00:04:52.642 (Clock.java [taskScheduler-12])

what is “skip master table” flag ?